I’m taking a coffee break (actually two, as I also created a diagram for this post) to quickly share today’s routing fun with you – a brief look at when BGP aggregates go bad. Or at least, an obvious but easily overlooked side effect of adding a BGP aggregate.

The Scenario

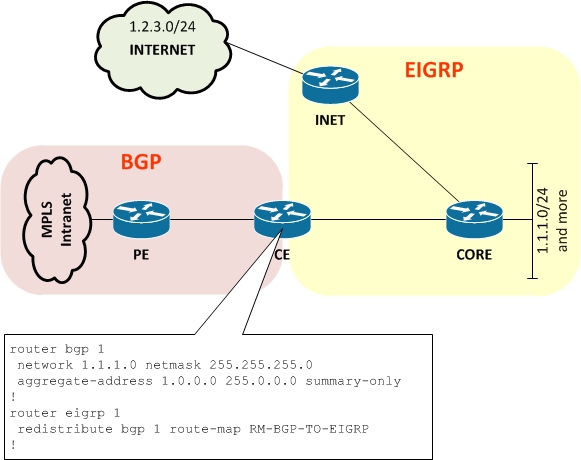

Here’s the basic connectivity we’re looking at, along with a config snippet that I’ll reference:

Topology

I have an HQ site comprising INET, CORE and CE routers, running EIGRP as the IGP. There are two ways for data to get ‘out’: the first is to the Internet via a default route, and the second is to the rest of the corporate Intranet by way of an MPLS service. This HQ site acts as the hub for the remote sites (it’s where the web proxies are located), and there is no default route on the MPLS. Instead, the routes shared to the Intranet are those of the HQ site, which include the web proxies.

The HQ site has quite a few local subnets active in the 1.0.0.0/8 range, but for legacy reasons, 1.2.3.0/24 (and a scattering of other subnets in that range) is reached via the Internet.

Routing

As shown in the configuration snippet above, the CE router generates a BGP aggregate for 1.0.0.0/8 and has network statements for a number of the local subnets (I have shown just 1.1.1.0/24 for simplicity). That injects the HQ routes into BGP so that the other sites on MPLS know where the HQ ranges are.

Similarly, the routes for remote sites learned via MPLS are redistributed into EIGRP at HQ via a route-map that sets metrics and whatever else is needed.
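For reference, here is a minimal sketch of what the network-statement and redistribution parts of the CE config might look like. The process numbers match the `bgp 1` and `eigrp 1` in the routing table output later in the post, and `RM-BGP-TO-EIGRP` is the route-map name used in the fix; the MPLS neighbor address (192.0.2.1), its AS number (65000) and the metric values are placeholders, not the real configuration.

```
! Hypothetical CE config: neighbor, remote AS and metrics are placeholders
router eigrp 1
 ! Pull the MPLS-learned (BGP) routes into the HQ IGP
 redistribute bgp 1 route-map RM-BGP-TO-EIGRP
!
router bgp 1
 ! Advertise each HQ subnet individually to the MPLS cloud
 network 1.1.1.0 mask 255.255.255.0
 neighbor 192.0.2.1 remote-as 65000
!
route-map RM-BGP-TO-EIGRP permit 10
 ! EIGRP metric: bandwidth (kbps), delay (tens of usec), reliability, load, MTU
 set metric 10000 100 255 1 1500
```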

So far so good.

The Change – BGP Aggregate

Originally, the HQ site was advertised using just the network statements. This became a pain as more subnets were enabled at HQ, since it meant constantly updating the configuration on the CE router. Instead, the decision was taken to advertise a 1.0.0.0/8 BGP aggregate to minimize future change requirements.
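The change itself is a single line under the BGP process, matching the `aggregate-address` line that appears in the `show run` output further down. `summary-only` suppresses the more-specific prefixes in BGP updates, so the MPLS cloud sees just the /8. Note that IOS only generates the aggregate while at least one more-specific component route is in the BGP table, so at least some of the network statements still need to stay.

```
router bgp 1
 ! Advertise one 1.0.0.0/8 summary to the MPLS cloud; summary-only
 ! suppresses more-specifics (e.g. 1.1.1.0/24) from outbound updates.
 ! The aggregate exists only while a component route is in the BGP table.
 aggregate-address 1.0.0.0 255.0.0.0 summary-only
```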

The Problem

The day after the change, 1.2.3.0/24 was reported as unreachable.

So, do you know what happened?

The Reason

It’s probably obvious, but the 1.0.0.0/8 BGP aggregate was being successfully generated and advertised to the MPLS cloud. Unfortunately, it was also being redistributed from BGP into EIGRP, exactly as configured. So the HQ site now had not only its list of 1.x.x.0/24 routes and a default route, but also 1.0.0.0/8 in EIGRP, pointing back to the CE router. Because 1.0.0.0/8 is a longer match than 0.0.0.0/0, traffic from local servers to 1.2.3.0/24 went to the CE instead of following the default route to the Internet. The CE, of course, dropped that traffic: it had no specific route for 1.2.3.0/24, and generating the aggregate had automatically created a null route covering the whole 1.0.0.0/8 range.
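A few standard IOS show commands would likely have exposed this quickly. They are listed here as a sketch of where to look, without captured output; the host address 1.2.3.4 is just an arbitrary example inside 1.2.3.0/24.

```
! On the CORE router: which route is chosen for a host in 1.2.3.0/24?
! With the aggregate leaked, longest match picks the EIGRP 1.0.0.0/8
! (toward the CE) over 0.0.0.0/0 (toward INET).
show ip route 1.2.3.4
!
! Where did the /8 come from? An external EIGRP route redistributed
! by the CE confirms the leak.
show ip eigrp topology 1.0.0.0 255.0.0.0
!
! On the CE: the aggregate sits in the BGP table as a locally generated route
show ip bgp 1.0.0.0 255.0.0.0
```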

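I believe this null route is the reason BGP considers the aggregate fair game to redistribute. From memory, if a BGP network statement is triggered by a route learned via the IGP, it doesn’t get redistributed back into that IGP: the IGP’s copy of the prefix has the lower administrative distance (90 for internal EIGRP versus 200 for a locally originated BGP route), so the routing table holds the EIGRP route rather than the BGP one, and redistribution only picks up routes that BGP actually owns in the routing table. When you generate an aggregate, however, IOS automatically creates a magical route to Null0 (magical because it’s not shown in the configuration). Nothing else offers 1.0.0.0/8, so the aggregate is installed in the routing table as a BGP route that is directly connected via Null0, and is therefore eligible for redistribution: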

```
R1#sh run | I 1.0.0.0
 aggregate-address 1.0.0.0 255.0.0.0 summary-only
```

```
R1#sh ip ro 1.0.0.0 255.0.0.0
Routing entry for 1.0.0.0/8
  Known via "bgp 1", distance 200, metric 0, type locally generated
  Redistributing via eigrp 1
  Routing Descriptor Blocks:
  * directly connected, via Null0
      Route metric is 0, traffic share count is 1
      AS Hops 0
```

As a side note, I have a memory that older versions of IOS (11.x for example) used to actually show a static null route in the configuration after you configured an aggregate route (in fact, I seem to recall having a discussion about this with the proctor when I took my CCIE R&S Lab). The suppression of that route is, I believe, a more recent change of behavior.

Solutions

A few possibilities here, I suppose:

- Inject 1.2.3.0/24 (and the other routes) into EIGRP at the INET router. Sadly this may mean more configuration in the future.

- Stop generating an aggregate on the CE. This may also mean more configuration in the future.

- Perhaps inject a default route to MPLS from the HQ site, and remove the aggregate. That would work, but goes against the idea that there’s no default on the remote sites and they have to use a proxy.

- Manage your IP address allocation so that you don’t have ranges split between Intranet and Internet? Not always easy to go back and fix the legacy of organic growth.

- Stop the aggregate being redistributed into EIGRP. This sounds like a winner to me if we want to keep the aggregate in place on the MPLS, but not affect local routing.
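As an illustration of the first option, the INET router could carry a static route for each Internet-reachable legacy subnet and redistribute those into EIGRP; a /24 then beats the leaked /8 on longest match regardless of the aggregate. This is a sketch only: the ISP next hop (203.0.113.1), the prefix-list and route-map names, and the metric values are all placeholders.

```
! On the INET router; 203.0.113.1 stands in for the ISP next hop
ip route 1.2.3.0 255.255.255.0 203.0.113.1
!
! Only the listed legacy subnets get redistributed
ip prefix-list PL-LEGACY-INTERNET seq 5 permit 1.2.3.0/24
!
route-map RM-STATIC-TO-EIGRP permit 10
 match ip address prefix-list PL-LEGACY-INTERNET
!
router eigrp 1
 ! A /24 in EIGRP beats the leaked 1.0.0.0/8 on longest match
 redistribute static metric 10000 100 255 1 1500 route-map RM-STATIC-TO-EIGRP
```

Each additional legacy subnet means another static route and prefix-list entry, which is the ongoing configuration cost of that option.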

Configuring a Fix

In its simplest form, we want to block locally originated BGP routes from being injected back into EIGRP. Locally originated routes (including the aggregate) have an empty AS path, which the AS-path regular expression `^$` matches. This is a lone BGP router (I’m not receiving other routes via iBGP), so let’s try inserting this at the top of the BGP-to-EIGRP route-map:

```
ip as-path access-list 1 permit ^$
!
route-map RM-BGP-TO-EIGRP deny 1
 description Don't redistribute locally-originated BGP routes
 match as-path 1
!
```

That should do the trick. And next time I generate an aggregate, I’ll be proactively looking for unusual routing situations like this.
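One caveat on the `^$` match: it matches any route with an empty AS path, which covers locally originated routes but also routes learned from iBGP peers that were originated inside the local AS. That’s why it matters that this is a lone BGP router. If iBGP peers are ever added, a narrower alternative is to deny the aggregate by prefix. A sketch, where the prefix-list name is hypothetical and the sequence number simply needs to sit ahead of the existing permit entries:

```
! Hypothetical alternative: block only the aggregate itself.
! With no ge/le, this entry matches exactly 1.0.0.0/8; every other
! prefix falls through to the route-map's later entries.
ip prefix-list PL-NO-AGGREGATE seq 5 permit 1.0.0.0/8
!
route-map RM-BGP-TO-EIGRP deny 2
 description Don't redistribute the 1.0.0.0/8 aggregate
 match ip address prefix-list PL-NO-AGGREGATE
```

The trade-off is maintenance: every new aggregate needs a matching prefix-list entry, whereas the AS-path match catches them all automatically.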

More Ideas?

Any thoughts on possible solutions and caveats? Bear in mind that the scenario I described is not entirely a match to the real situation – I changed things around a bit for the purposes of simplifying the explanation.

If you liked this post, please do click through to the source at When BGP Aggregates Go Bad and give me a share/like. Thank you!